I have the pleasure of attending the Warm Crocodile Conference, held by Microsoft in Copenhagen these days, along with a couple of colleagues from ScanJour A/S. The first thing to mention is that the venue is rather unusual: the conference is held in an “apartment hotel”, which means that the sessions take place in big apartments, and in one of the sessions I attended today, approx. half of the audience had to stand. I might be getting old, but I certainly prefer a good old-fashioned auditorium with nice soft seats.

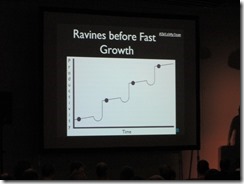

Enough of that; let’s focus on the presentations, which so far have been good. The subject of the keynote by Roy Osherove this morning was “Ravine”, i.e. the progress of learning, as illustrated by the following slide.

Keynote: Ravines of learning

The talk had both a professional and a personal angle, i.e. how we develop our skills professionally, and how having a child also creates a need to acquire new skills on a personal level.

The slide above shows the situation where we experience a decrease in productivity, e.g. when having to learn new skills or technologies, but where the net effect after a while should be an increase in productivity. An important point here is also that you need to get out of your “comfort zone” now and then in order to increase your personal knowledge and productivity. If you only keep working on your regular tasks, you will not improve.

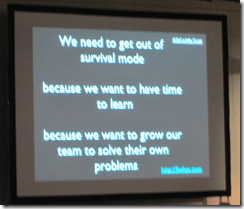

Then he talked about “survival mode” (or “fire-fighting”, as I usually call this state), where you or the team are constantly chasing tasks and often or always have to cut corners, typically on quality, in order to meet the deadlines. I apologize for the bad quality of the left picture, but I was standing in the back of the room (again, the conditions at the conference were surprisingly primitive, but the content of the talk was good).

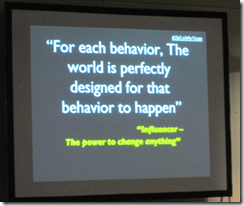

You need to be aware of when you are in “survival mode” and try to get out of it. Especially if you are the lead of a team, you must get the team out of survival mode as fast as possible once you identify that it has happened. The right slide says that there is always a reason/root cause for a behavior, i.e. when you investigate an issue further, nothing simply happens by accident.

Last part of the talk was about changing behavior, which consists of the following three aspects:

- Personal

- Social

- Environmental

Changing behavior on a personal level might happen through regular 1-1 talks, reviews, follow-ups etc. with the person. On the social level, it can mean gathering a group of people to watch videos or otherwise introducing new ideas into the group. Finally, the environmental aspect can be e.g. changing bonuses/rewards that do not encourage the desired behavior.

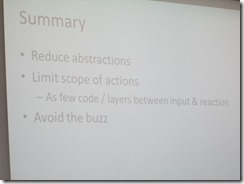

A different view on layered architecture by Ayende Rahien

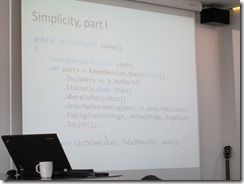

Next I went to the talk by Ayende, author of/involved in e.g. NHibernate and RavenDB, on layered architecture and especially on reducing the number of layers when possible. The purpose of introducing layers in a software system is normally to abstract the lower parts of the system, thereby making those levels easier to use and the overall system more maintainable. I’ve seen (and unfortunately also written myself) layers that merely introduce additional overhead/deadweight to the system, e.g. for simple database calls.

The talk started out with a historical tour of software development, going back to the end of the 1970s, when computers were mainly standalone systems with limited need for any layers. But as e.g. networks became prevalent, a need arose for abstractions such as data access and business logic layers. The point of the talk was that we should not blindly implement layers and abstractions just because we can, but ensure that every layer actually provides a sound abstraction and overall value to the system.

The left slide below shows actual code from Ayende’s blog (based on RavenDB), where instead of having a dedicated repository for getting the blog posts, he simply queries them using LINQ, since there is almost no logic involved except the call to the WhereIsPublicPost() extension method. The right slide gives examples of abstractions, with a note to limit their number.

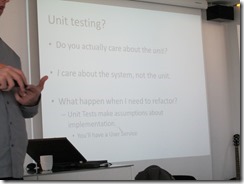

Then the talk went on to unit testing vs. integration/functional testing, where Ayende advocated focusing on testing the full system instead of on unit tests, which might just “test the abstraction”. By using e.g. an in-memory database, you can still run your integration tests fast and isolated. As I primarily work with testing, I certainly see the point of focusing on testing the full system. After all, our goal is to have a working system, not just passing unit tests. Nevertheless, unit tests do “raise the bar” for what needs to be tested by integration tests or even manually, but we should of course make sure that the unit tests actually provide value.
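
To make the in-memory database idea concrete, here is a minimal sketch of my own. It is not code from the talk, which was about .NET and RavenDB. It assumes Python with the standard-library `sqlite3` module as a stand-in, and the table and function names (`posts`, `add_post`, `public_post_titles`) are hypothetical.

```python
import sqlite3


def create_schema(conn):
    # Hypothetical schema for illustration only.
    conn.execute(
        "CREATE TABLE posts ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, is_public INTEGER NOT NULL)"
    )


def add_post(conn, title, is_public):
    conn.execute(
        "INSERT INTO posts (title, is_public) VALUES (?, ?)",
        (title, int(is_public)),
    )


def public_post_titles(conn):
    # The code under test talks to a real SQL engine, not to a fake.
    rows = conn.execute("SELECT title FROM posts WHERE is_public = 1 ORDER BY id")
    return [row[0] for row in rows]


def test_public_post_titles_mixed_posts_returns_only_public():
    # ":memory:" gives every test its own fresh, isolated database.
    conn = sqlite3.connect(":memory:")
    try:
        create_schema(conn)
        add_post(conn, "Hello", True)
        add_post(conn, "Draft", False)
        add_post(conn, "World", True)
        assert public_post_titles(conn) == ["Hello", "World"]
    finally:
        conn.close()
```

Because nothing touches the disk or a shared server, a test like this stays fast and can run in any order, while still exercising the real query.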

Introduction to Twitter, Facebook and LinkedIn APIs using OAuth

Anne-Sofie Nielsen gave a “Tour de API” of how to access Twitter, Facebook and LinkedIn using OAuth. Unfortunately the light in that room wasn’t good for taking pictures, but her presentation can be found at http://prezi.com/fcyng34ox56t/tour-de-api/. It was an interesting introduction to integrating with social networks, and it’s not unlikely that our case and document management system can use this in some situations.

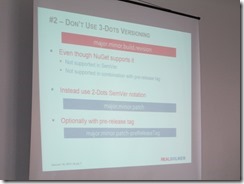

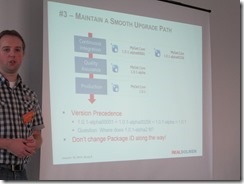

NuGet

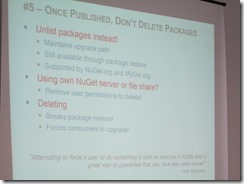

After lunch, I went to the first of two talks on NuGet today, both held by the same speaker. As I see it, we actually started out with the “advanced” session and closed the day with the more introductory talk. Nevertheless, the two talks in combination have given me valuable input on how to start using NuGet to help manage dependencies between our internal automation frameworks. In his first talk he gave a number of pieces of advice, of which I found the following most relevant, especially the slide on version number schemes, which has given me some challenges, as our binaries built by Team Foundation Server use the 3-dots format.

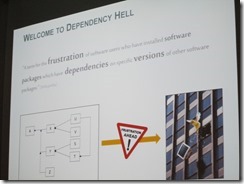

Breaking the chronological order here, but as we’re on the subject, I’ll move right on to the introduction session on NuGet. From this I found the following three slides informative, describing symptoms of problems in your overall build infrastructure and the term “dependency hell”, which is by no means new to me.

I even found an MSDN article from 2001 (!) describing the “DLL Hell”, as we used to call it back in the COM days. .NET has reduced much of the pain related to this, but there is certainly room for a tool like NuGet to help manage these dependencies.

Unit Testing Good Practices & Horrible Mistakes

Roy, who gave the keynote this morning, also had two sessions on unit testing, of which I attended the last one, on best practices (apparently along with half of all conference participants, as most of us had to stand up; this explains the bad image quality of some of the pictures, as your arms get tired after standing up for a long time).

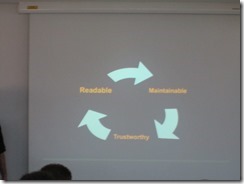

Good unit tests (and automated tests in general, I would say) should be trustworthy (i.e. not fail intermittently), maintainable and readable. As the right-most slide shows, these are linked, in the sense that tests that are not easily readable are harder to maintain and therefore also less likely to be trusted in the long run.

Characteristics of unit tests are that they

- Have a fast execution time

- Execute in memory

- Have full control over the “test context”, e.g. input values

- Fake dependencies

If a test does not comply with these, it is an integration test (and he mentioned several times that unit and integration tests should be placed in different projects, again to help maintainability).
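
As an illustration of these characteristics, here is a small sketch of my own in Python (not from Roy’s slides). The classes `FixedClock` and `Greeter` are hypothetical, and the date is arbitrary. The test runs purely in memory, and the fake clock gives it full control over the otherwise unpredictable input “current time”.

```python
from datetime import datetime


class FixedClock:
    """Fake dependency: always returns the time chosen by the test."""

    def __init__(self, fixed):
        self._fixed = fixed

    def now(self):
        return self._fixed


class Greeter:
    """Hypothetical unit under test; it gets its clock injected."""

    def __init__(self, clock):
        self._clock = clock

    def greeting(self):
        return "Good morning" if self._clock.now().hour < 12 else "Good afternoon"


def test_greeting_before_noon_returns_good_morning():
    # The test decides what time it is, so the result never depends on
    # when or where the test happens to run.
    greeter = Greeter(FixedClock(datetime(2013, 1, 16, 9, 0)))
    assert greeter.greeting() == "Good morning"
```

If `Greeter` read the system clock directly, the same test would pass in the morning and fail in the afternoon, which is exactly the kind of test you stop trusting.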

Some other very valuable points from his talk were:

- Have a naming convention for your tests. His suggestion is [UnitOfWork_StateUnderTest_ExpectedBehavior] which he has also described at http://osherove.com/blog/2005/4/3/naming-standards-for-unit-tests.html

- Avoid multiple asserts against different objects in a test. The problem is that if the first assert fails, you will never know whether the other object(s) passed or failed their checks. Instead, these should be separated into different tests.

- Don’t fake database tests; perform these as integration tests running against the database instead.

- Use at most one fake/mock object per test, and preferably avoid fakes altogether, as they make the test depend on the internals of your actual implementation, which might reduce the maintainability of both test and production code.
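
The first two points can be sketched like this. It is my own Python example using the standard `unittest` module, not code from the talk. `Account` and `transfer` are hypothetical, and the naming convention is adapted to Python’s lowercase style as `test_<unit_of_work>_<state_under_test>_<expected_behavior>`.

```python
import unittest


class Account:
    """Hypothetical production class, kept minimal for the example."""

    def __init__(self, balance):
        self.balance = balance


def transfer(source, target, amount):
    if amount > source.balance:
        raise ValueError("insufficient funds")
    source.balance -= amount
    target.balance += amount


class TransferTests(unittest.TestCase):
    # One test per object, so a failure on the source account can never
    # hide the result for the target account (and vice versa).
    def test_transfer_sufficient_funds_debits_source(self):
        source, target = Account(100), Account(0)
        transfer(source, target, 30)
        self.assertEqual(source.balance, 70)

    def test_transfer_sufficient_funds_credits_target(self):
        source, target = Account(100), Account(0)
        transfer(source, target, 30)
        self.assertEqual(target.balance, 30)

    def test_transfer_insufficient_funds_raises_value_error(self):
        with self.assertRaises(ValueError):
            transfer(Account(10), Account(0), 30)
```

With names like these, the test report alone tells you which behavior broke, without opening the test code.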

Roy has written the book The Art of Unit Testing, which most likely contains all the above advice plus a lot more.

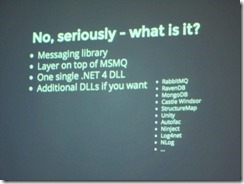

Implementing loosely coupled software systems using Rebus

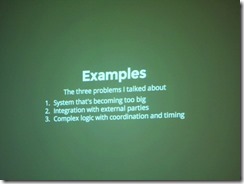

This talk was given by the author of Rebus, who gave a live demonstration of implementing a messaging-based system on top of this free, open-source message queue/bus, which handles the exchange of messages between different components in a software system.

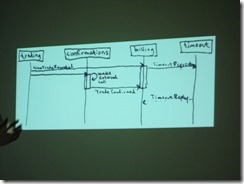

With help from some prepared code and code snippets, we almost ended up with an implementation of the following system within the one-hour presentation, including the mechanics for handling messages that time out.

Message queuing is certainly not a new technology, but Rebus seems at first sight to be a very useful implementation that can be used to help componentize/modularize a system (rather than developing a big monolithic system).
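
To show what I mean by componentizing through messages, here is a tiny in-memory sketch in Python. Note that this is not Rebus and does not use its API. The `InMemoryBus` class and the `OrderPlaced` message are hypothetical names of my own, and a real bus adds things like durable queues, retries and timeouts.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical message type."""
    order_id: int


class InMemoryBus:
    """Toy bus: queues messages and dispatches them by message type."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, message_type, handler):
        self._handlers[message_type].append(handler)

    def send(self, message):
        # The sender only knows the message, not who will handle it.
        self._queue.append(message)

    def pump(self):
        """Deliver all queued messages; return the number of handler calls."""
        handled = 0
        while self._queue:
            message = self._queue.popleft()
            for handler in self._handlers[type(message)]:
                handler(message)
                handled += 1
        return handled


# Usage: the "shipping" component reacts to orders without the sender
# holding a reference to it.
bus = InMemoryBus()
shipped = []
bus.subscribe(OrderPlaced, lambda message: shipped.append(message.order_id))
bus.send(OrderPlaced(order_id=42))
bus.pump()
```

The point of the design is that sender and receiver only share the message type, so either side can be replaced or moved to another process without changing the other.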

Despite the rather unusual venue, it has been a good first conference day with nothing but interesting and motivating speakers and topics.

And not least, I won a free copy of the “Pro NuGet” book written by the speaker of the two NuGet sessions. I’m very much looking forward to reading this book.