The last book I read from “The Pragmatic Programmer” series was “Ship It!: A Practical Guide to Successful Software Projects” some years back, which I remember as a well-written, practically oriented book on developing software. I spent some time over the Christmas holiday reading “Lean from the Trenches: Managing Large-Scale Projects with Kanban”, which is also a short, extremely well-written but still comprehensive book. It describes the author's experiences from building a nationwide software system for the Swedish police.

Being a tester, I found the sections on how they handled test and quality assurance on the project especially interesting. Along with my earlier experiences, this book confirms my view that testing is becoming an integrated part of software development, and that the role of the tester is moving towards quality assurance rather than primarily executing tests. I still believe there is a future for exploratory testing, but scripted tests, even functional ones, seem in many cases to be best handled by the developers.

Minimum Viable Product or “How to slice the elephant”

The book starts out on page 6 by describing how they divided the system into small deliverables, in this case:

- Start by deploying the system to one small region

- First version only supports a small number of case types; the rest are handled the old way

- Having the customer in-house

These are a few simple rules, but as the rest of the book shows, they play a very important role in making the project successful.

Cause-Effect Diagrams

The first part of the book describes the project and its processes at an overall level, whereas the last third goes into more detail on the techniques used by the team. One of the chapters in this section contains an introduction to getting started with Cause-Effect Diagrams/Analysis, for which the book describes the following basic process (page 134):

1. Select a problem – anything that’s bothering you – and write it down

2. Trace “upward” to figure out the business consequences, the “visible damage” that your problem is causing

3. Trace “downward” to find the root cause (or causes)

4. Identify and highlight vicious cycles (circular paths)

5. Iterate these steps a few times to refine and clarify your diagram

6. Decide which root causes to address and how (that is, which countermeasures to implement)
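Steps 2 to 4 can be sketched as a small directed graph. This is only my own minimal illustration, not something from the book: the function names and the `causes` dictionary are assumptions, and the three nodes come from the book's “Defects Released to Production” example with all intermediate steps left out.

```python
# Each key is an effect; its list holds the direct causes one step "downward".
# Simplified illustration: the book's diagram has many more nodes.
causes = {
    "Angry Customers": ["Defects Released to Production"],
    "Defects Released to Production": [
        "Lack of Tools and Training in Test Automation"
    ],
}


def reachable(graph, start):
    """Return every node reachable from start by following cause edges."""
    seen, stack = set(), list(graph.get(start, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def root_causes(graph, problem):
    """Trace downward: root causes are reachable nodes with no recorded cause."""
    return sorted(n for n in reachable(graph, problem) if not graph.get(n))


def in_vicious_cycle(graph, node):
    """A node sits on a circular path if it can be reached from itself."""
    return node in reachable(graph, node)


roots = root_causes(causes, "Angry Customers")
```

Tracing “upward” is the same walk on the reversed graph, so one representation covers both directions.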

One of the examples in the book starts from the problem “Defects Released to Production”, from which the consequence “Angry Customers” is identified, as well as “Lack of Tools and Training in Test Automation” as a root cause, as shown in the following example:

Taken from page 139, although the diagram in the book is more comprehensive, e.g. it identifies multiple root causes. In relation to Cause-Effect Analysis, the book says on page 52 that

“Bugs in your product are a symptom of bugs in your process”

This is another way of phrasing the well-known statement that you cannot test quality into a product; quality needs to be an integrated part of the software development process.

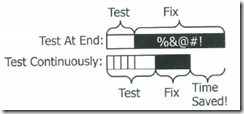

From a testing perspective, this book contains a lot of useful information. For example, chapter 9, “Handling Bugs”, visualizes how “continuous testing” helps minimize the total time spent on testing and bug-fixing by having bugs fixed as early as possible. It's been a long time since I've met a team that only tests at the end of a release cycle, but the following figures still show how “continuous testing” can reduce the total cost of developing software.

Traditional waterfall approach:

Using continuous testing:

Even though we spend more time on testing, it pays off by lowering the time spent on bugfixing:

Limit to 30 active bugs

Another interesting idea from this chapter is to set a WIP (Work in Progress) limit of 30 bugs. Bugs that don't make it into the top 30 are immediately marked as “deferred” and won't be fixed. If a new bug is considered important enough, one of the existing 30 bugs is removed from the list to make room for it. This is an effective way of limiting the number of bugs, and I think it also helps keep the focus on fixing bugs immediately (even without necessarily reporting them), since you don't have the option of just piling the bug on top of the probably several hundred already reported. You easily get “bug-blind” when you have more than 50-100 reported bugs in your system, and setting a hard limit of e.g. 30 seems to be a good way to mitigate this issue.
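The rule is simple enough to sketch in a few lines. The following is my own minimal illustration, assuming a numeric priority where higher means more important; the `Bug` and `BugBoard` names are hypothetical and not taken from the book, which describes a physical board rather than code.

```python
from dataclasses import dataclass, field


@dataclass
class Bug:
    title: str
    priority: int  # assumption: higher number = more important


@dataclass
class BugBoard:
    limit: int = 30  # the hard WIP limit on active bugs
    active: list = field(default_factory=list)
    deferred: list = field(default_factory=list)

    def report(self, bug):
        """Add a bug; return whichever bug ends up deferred, or None."""
        if len(self.active) < self.limit:
            self.active.append(bug)
            return None
        weakest = min(self.active, key=lambda b: b.priority)
        if bug.priority > weakest.priority:
            # The new bug is important enough: it pushes the weakest one out.
            self.active.remove(weakest)
            self.active.append(bug)
            self.deferred.append(weakest)
            return weakest
        # The new bug does not make the top list and is deferred right away.
        self.deferred.append(bug)
        return bug
```

The point of the design is that `active` can never grow beyond `limit`, so every new bug forces an explicit decision instead of silently extending the backlog.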

Reducing the test automation backlog

Chapter 18 is dedicated to describing how to handle a lack of test automation for a legacy product, where the advice is to have the whole team increase the test automation a little each iteration. It's important to note that it should be the whole team, and not e.g. a separate team, as the whole team should learn and be responsible for writing tests. For identifying which test cases to automate first, the book prioritizes the tests by

- How risky the test case is, i.e. will it keep you awake if this test is not executed?

- Cost of executing test manually

- Cost of automating test case
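To make the three criteria concrete, here is a small sketch of how such a list could be sorted. The scoring formula, the units, and the example test cases are all my own assumptions for illustration; the book does not prescribe a formula.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    risk: int                    # assumption: 1 (harmless) to 5 (keeps you awake)
    manual_cost_hours: float     # effort to run the test manually once
    automation_cost_days: float  # effort to automate the test


def score(c):
    """Hypothetical score: risky, expensive-to-run, cheap-to-automate wins."""
    return c.risk * c.manual_cost_hours / c.automation_cost_days


# Made-up example data, not from the book.
candidates = [
    Candidate("Change customer address", 1, 0.5, 3),
    Candidate("Block account", 5, 2, 1),
    Candidate("Print invoice", 3, 1, 2),
]

# Highest score first: these are the tests to automate in the next iteration.
ordered = sorted(candidates, key=score, reverse=True)
```

In practice the ranking is a conversation starter for the team rather than a precise calculation, so a rough scale is enough.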

Besides what I've described in this review, the book also contains valuable tips on how to organize your scrum boards, standups etc.

In short, this is a really good book that is also a quick read, so I recommend it to everyone participating in software development projects.