Last week I attended the User Conference on Advanced Automated Testing (UCAAT) 2013 in Paris, which primarily covered model based testing. It was a good opportunity for me to get an overview of how model based testing is used in different areas and industries, as well as the direction in which it is moving. This post covers what I found to be the highlights of the conference, namely:

- How Spotify uses model based testing to bridge the gap between testers, developers and domain experts

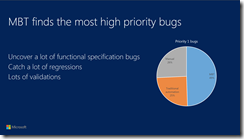

- Microsoft's metrics show that model based tests can be used to find bugs earlier in the development cycle

- Model based testing is quite widely used in the automotive and telecommunication industries

- Increasing use of the TTCN-3 language to write automated test cases

As can be seen from the conference program, the conference consisted of a relatively high number of short 20-minute sessions. This meant that many different areas of model based testing were presented, from Spotify to nuclear and flight safety systems, which gave lots of input and inspiration. It also made for a good social atmosphere, where you could easily find a speaker and ask about their area. The schedule was probably a little too tight (30 minutes per session would be better), but my impression is that organizing the sessions this way resulted in a very dynamic conference with lots of possibilities for networking.

Improving collaboration using Model Based Testing at Spotify

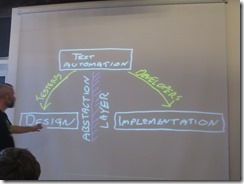

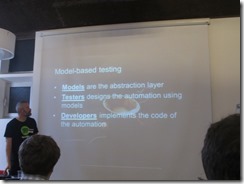

Kristian and Pang from Spotify gave a 90-minute tutorial on their use of Model Based Testing at Spotify using the open source tool GraphWalker, of which Kristian is one of the authors. In contrast to Spec Explorer, where models are described in C#, GraphWalker uses a visual designer for modeling, and from their presentation it seems that the model visualizations produced this way are a lot easier to understand for people not used to modeling.

This was also one of the major points of the presentation: these models can be used to improve collaboration and communication within the Scrum teams, as they can be understood easily by all Scrum team members, the Product Owner and other stakeholders.

Another very interesting feature of GraphWalker is the ability to combine models, which Spotify uses to let the individual teams build models that can then be reused by other teams. One example they mentioned was a model for login, which the "Login-team" could use for extensive testing, whereas other teams depending on this subsystem would just use it for testing the most common paths through the login process before entering their own part of the system.
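To make the idea of combining models a bit more concrete, here is a minimal sketch in plain Python. It does not use GraphWalker or its API, and it is not Spotify's implementation. The state names, action names and helper functions (`combine`, `shortest_path`, `random_walk`) are all made up for illustration. Each model is simply a dictionary mapping a state to its outgoing actions, and two models are joined on a shared state name.

```python
import random
from collections import deque

# Hypothetical model owned by the "Login-team": state -> {action: next_state}
LOGIN_MODEL = {
    "LoggedOut": {
        "enter_valid_credentials": "LoggedIn",
        "enter_invalid_credentials": "LoginError",
    },
    "LoginError": {"dismiss_error": "LoggedOut"},
    "LoggedIn": {},
}

# Hypothetical model owned by another team; it starts where login ends.
PLAYER_MODEL = {
    "LoggedIn": {"open_playlist": "PlaylistOpen"},
    "PlaylistOpen": {"play_track": "Playing", "close_playlist": "LoggedIn"},
    "Playing": {"pause": "PlaylistOpen"},
}


def combine(*models):
    """Merge models by joining states that share a name (inputs are not modified)."""
    combined = {}
    for model in models:
        for state, edges in model.items():
            combined.setdefault(state, {}).update(edges)
    return combined


def shortest_path(model, start, goal):
    """Breadth-first search: the shortest list of actions from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, actions = queue.popleft()
        if state == goal:
            return actions
        for action, next_state in model[state].items():
            if next_state not in seen:
                seen.add(next_state)
                queue.append((next_state, actions + [action]))
    return None  # goal is not reachable from start


def random_walk(model, start, steps, rng):
    """Follow up to `steps` randomly chosen actions; returns (actions, end_state)."""
    state, actions = start, []
    for _ in range(steps):
        edges = model[state]
        if not edges:
            break  # no outgoing actions from this state
        action = rng.choice(sorted(edges))
        actions.append(action)
        state = edges[action]
    return actions, state


if __name__ == "__main__":
    full_model = combine(LOGIN_MODEL, PLAYER_MODEL)
    # Another team: take the common path through login, then explore their own part.
    print(shortest_path(full_model, "LoggedOut", "LoggedIn"))
    print(random_walk(PLAYER_MODEL, "LoggedIn", 10, random.Random(1)))
    # Login team: explore the login model itself much more thoroughly.
    print(random_walk(LOGIN_MODEL, "LoggedOut", 50, random.Random(1)))
```

In this sketch the login team would run long random walks over their own model, while a dependent team only takes the shortest path through login before walking their own model, which mirrors the split described in the tutorial.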

The rise of TTCN-3?

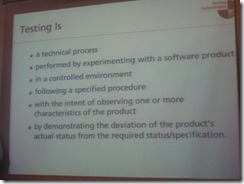

Perhaps due to the fact that I'm not working full-time as a tester these days, I didn't know about the TTCN-3 language before attending the conference. In short, TTCN-3 is a language for writing automated test cases, including what seems to be a significant library of helper methods, which was used to demonstrate how to mock a DNS server for testing a DNS query. The following two slides show the context of testing with TTCN-3, and what I basically got from the presentation and my subsequent talk with the presenter is that TTCN-3 is “just another” programming language, although designed with testers in mind, i.e. the libraries and structures target the flow of test cases rather than production code.

I met with the presenter of this tutorial, and even though the usage of TTCN-3 seems to be increasing, especially within large companies like Ericsson (who seemed very satisfied with it), I find it hard to understand why we need a new language specifically for testing, also taking into consideration that TTCN-3 isn't particularly human-readable, i.e. accessible to non-technical people. I would prefer to see the libraries behind TTCN-3 made available for use in other programming languages, but there don't seem to be any plans for doing so.

It might make sense to use it in a large organization where you have a critical mass of people writing test scripts, but I personally believe that test automation should be written in the same language as the production code, and TTCN-3 hasn’t changed my mind about this. Using the same tools and languages on a project for both production and test code makes it a lot easier for people working in different areas to communicate and collaborate.

Finding bugs early using model based testing at Microsoft

Microsoft gave two very good presentations on the use of model based testing for testing Windows Phone, which showed some interesting numbers on the bugs found as well as on when in the development cycle they were found.

Although I haven’t collected similar statistics from my usage of model based testing, my experience is also that modeling the system helps you find structural/logical bugs during early development phases.
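As a small illustration of how a model can expose structural problems before any test is run, here is a sketch in Python. It is not Microsoft's approach and not taken from any of the presentations. The version control states and the `structural_problems` helper are hypothetical, and the checks are deliberately simple: states that can never be reached from the start state, and reachable states with no way out.

```python
def reachable_states(model, start):
    """Return every state that can be reached from `start` by following actions."""
    seen, stack = {start}, [start]
    while stack:
        state = stack.pop()
        for next_state in model.get(state, {}).values():
            if next_state not in seen:
                seen.add(next_state)
                stack.append(next_state)
    return seen


def structural_problems(model, start, final_states=()):
    """Report unreachable states and unintended dead ends in a state model."""
    reachable = reachable_states(model, start)
    all_states = set(model) | {
        next_state for edges in model.values() for next_state in edges.values()
    }
    return {
        "unreachable": sorted(all_states - reachable),
        "dead_ends": sorted(
            state
            for state in reachable
            if not model.get(state) and state not in final_states
        ),
    }


# Hypothetical, deliberately flawed model of a version control workflow.
VCS_MODEL = {
    "Clean": {"edit_file": "Modified"},
    "Modified": {"commit": "Clean", "start_merge": "Conflict"},
    "Conflict": {},                  # the "resolve" action was forgotten
    "Locked": {"unlock": "Clean"},   # no action ever leads to this state
}

if __name__ == "__main__":
    print(structural_problems(VCS_MODEL, "Clean"))
```

Both findings are questions for the team ("how do you get out of a conflict?", "how does a file become locked?") that can be raised as soon as the model exists, which is the kind of early feedback I have experienced when modeling.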

Model based testing at Unity

I also had the pleasure of giving a presentation at the conference myself, on our early findings from using MBT for testing Version Control Integration and simple in-game physics of the Unity 3D game engine. The presentation is available here: UCAAT-ModelBasedTesting3DGameEngine-RasmusSelsmark.pdf and was (of course) done as a simple game inside the Unity editor. At least I got several positive comments on the presentation format :)

Overall it was a good conference. There were no superstars at this conference (although the guys from Spotify showed some very interesting uses of models for testing), but again there were many presentations and lots of opportunities to meet and talk with people about how they are using model based testing in their day-to-day work.