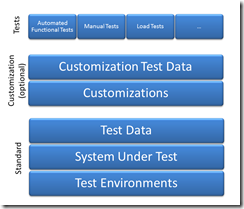

While doing software test automation, I have realized that there is a lot more to automate than just the tests if test automation is to run successfully with minimal maintenance. For testing Captia I see the layers shown in the diagram above, which I assume apply to other software products as well.

The important point is that your test automation rests on a foundation, which must work properly to give you a stable environment for running the tests. For a long time I focused on the Tests layer of the above diagram, whereas at ScanJour we have recently worked on the lower parts of the “test automation eco-system”. Jens Tidemann, who started here at the beginning of the year, has pushed this a lot by starting to develop PowerShell scripts for setting up environments and the system under test.

This blog post describes our solution for automating the process of setting up test environments in a uniform way across products.

Use Source Control for storing automation scripts and configuration

Everything described in this post can be automated, and in our case it is. As with any other test automation, treat this as a regular development project, i.e.

- Plan

- Store all assets (scripts, tools, configuration files etc.) in source control

- Write unit tests when possible for the tools

- Consider backwards compatibility when making changes to the tools. In our case the automation supports different versions of the product, which has been really valuable for recreating test environments that were previously set up manually.

As described later, even configurations for setting up customized test environments can be stored in source control.

“Test Environments” layer

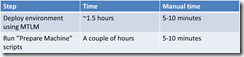

This layer covers setting up and configuring test environments, i.e. the machines used for executing either manual or automated tests. In our case we’re running virtual machines/environments using Microsoft TFS Lab Management, but this layer could be based on another virtualization technology or on physical machines/devices.

For setting up the raw machines, we’re currently implementing Microsoft Deployment Toolkit, which automates the deployment of Windows images with or without additional software packages, such as Microsoft Office, that are required by the System Under Test.

Another part of automating this layer is a set of PowerShell scripts that perform the following tasks:

- Join domain (we’re typically running network isolated environments with their own domain controller). This requires the user to enter credentials, so it is done as the first step. The rest of the tasks are performed automatically.

- Install general prerequisites, e.g. .NET, IIS

- Install latest Windows Updates (with an XML based “exclude” list containing e.g. IE9, which we don’t want installed)
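The exclude-list idea from the last task can be sketched as follows. This is a minimal illustration in Python rather than the PowerShell we actually use; the XML element and attribute names, the update titles and the KB numbers are all assumptions made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical exclude list; element and attribute names are assumptions.
EXCLUDE_XML = """
<ExcludedUpdates>
  <Update titleContains="Internet Explorer 9" />
</ExcludedUpdates>
"""


def load_exclude_patterns(xml_text):
    """Return the lower-cased title fragments listed in the exclude file."""
    root = ET.fromstring(xml_text)
    return [u.get("titleContains", "").lower() for u in root.findall("Update")]


def filter_updates(available_titles, patterns):
    """Keep only the updates whose title matches none of the exclude patterns."""
    return [
        title
        for title in available_titles
        if not any(p and p in title.lower() for p in patterns)
    ]


# Made-up update titles with placeholder KB numbers.
available = [
    "Security Update for Windows (KB0000001)",
    "Windows Internet Explorer 9 for Windows",
    "Update for Microsoft .NET Framework (KB0000002)",
]

to_install = filter_updates(available, load_exclude_patterns(EXCLUDE_XML))
for title in to_install:
    print(title)
```

Keeping the exclude list in a separate XML file means it can live in source control next to the scripts and be changed without touching the script logic.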

With this approach the effort for deploying a baseline environment has been reduced to something like 10-20 minutes of manual work. It of course still takes several hours to deploy the environment in TFS Lab Management, but most of that is done automatically and therefore doesn’t require user interaction.

After the environment has been deployed and the above steps performed, a “baseline” snapshot is taken, which contains:

- Windows OS

- Joined the test domain

- Configured IIS etc.

- Windows Updates

- Microsoft Build Agent in order to do automated deployments from TFS

- Optionally Microsoft Test Agent if used for automated test execution

Whereas the baseline does not contain:

- Active directory users

- Prerequisites for system under test, like database drivers etc.

This way we have environments that can be used for multiple purposes. Be careful about what you include in the baseline snapshot, so you don’t “pollute” it with components that lock the environment to a too narrow purpose. In our case we’re e.g. not installing Oracle database drivers in the baseline environments, since this would mean that only specific versions of Captia could be installed.

“System Under Test” layer (with prerequisites)

With a baseline for the test environments as described above, the product to be tested can be installed on any of the available environments, depending on the type of test to be performed.

E.g. if just performing a visitation or testing some simple functionality, one of the small environments can be selected. For testing more complex scenarios, a few complex environments are available.

Prerequisites

A key point here is to be able to automate installation of both the product to be tested and its prerequisites. Examples of prerequisites that can be installed automatically are:

- Database drivers

- Third party dependencies

If a prerequisite either takes a long time to install or is not easily automated, you might choose to install it manually and include it in the baseline snapshot. But this means that the environment is then “locked” and might not easily be used for other purposes.

System Under Test

With the prerequisites installed, the actual product to be tested can then be installed. Depending on the product there might be different ways to do this. If you have multiple teams working on different products, consider agreeing on a common way of installing each product, so that other teams can set up integration test environments.

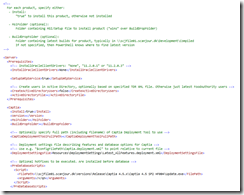

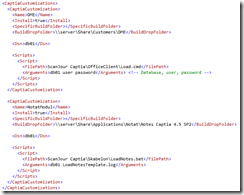

We have solved this by developing PowerShell scripts that read from an XML file which modules to install. Each team then develops the PowerShell scripts needed for installing their product. Below is an example of an XML configuration file used for installing Captia on a test environment:
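As a rough sketch of the mechanism only (in Python rather than our PowerShell, and with hypothetical element names, attributes and version numbers rather than the real Captia schema), reading the module list and dispatching to per-team installers could look like this:

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration; names, attributes and versions are
# illustrative assumptions, not the real Captia schema.
CONFIG_XML = """
<Environment name="small-web">
  <Module name="DatabaseDrivers" version="1.0" />
  <Module name="StandardProduct" version="4.5" />
</Environment>
"""


def read_modules(xml_text):
    """Return (name, version) pairs in the order they appear in the file."""
    root = ET.fromstring(xml_text)
    return [(m.get("name"), m.get("version")) for m in root.findall("Module")]


def install_all(modules, installers):
    """Call the installer registered for each module; fail fast on unknown ones."""
    log = []
    for name, version in modules:
        if name not in installers:
            raise KeyError(f"No installer registered for module {name!r}")
        log.append(installers[name](version))
    return log


# Each team would supply its own installer; these stand-ins only report.
installers = {
    "DatabaseDrivers": lambda v: f"installed DatabaseDrivers {v}",
    "StandardProduct": lambda v: f"installed StandardProduct {v}",
}

for line in install_all(read_modules(CONFIG_XML), installers):
    print(line)
```

The point of the design is the split of responsibilities: the configuration file says what to install and in which order, while each team owns the script that knows how to install its module.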

“Test Data” layer

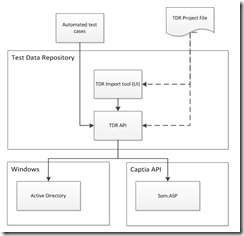

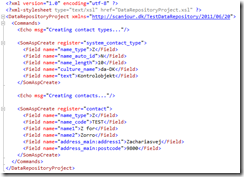

Having installed the product, the next step is to apply test data. For this purpose we have developed Test Data Repository, which is able to populate the following types of data:

- Active Directory users and groups

- Exchange mailboxes

- Files

- Test data in Captia (e.g. cases and documents)

As can be seen from the conceptual overview below, Test Data Repository has both a UI front-end and an API.

The UI is used in manual testing for populating test data, whereas the API can be used by automated tests for creating prerequisite data for the test and asserting results.
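To make the "create prerequisite data, then assert" pattern concrete, here is a small Python sketch. The class and its methods are a hypothetical in-memory stand-in invented for this example; they are not the actual Test Data Repository API.

```python
class InMemoryTestDataRepository:
    """Hypothetical stand-in for a test data API; not the real interface."""

    def __init__(self):
        self._cases = {}
        self._next_id = 1

    def create_case(self, title):
        """Create a case and return its id."""
        case_id = self._next_id
        self._next_id += 1
        self._cases[case_id] = {"title": title, "documents": []}
        return case_id

    def add_document(self, case_id, name):
        """Attach a document to an existing case."""
        self._cases[case_id]["documents"].append(name)

    def get_case(self, case_id):
        """Return a copy of the stored case so callers cannot mutate it."""
        case = self._cases[case_id]
        return {"title": case["title"], "documents": list(case["documents"])}


def check_document_is_filed_on_case(repo):
    # Arrange: create the prerequisite data through the API.
    case_id = repo.create_case("Example case")
    # Act: in a real test this step would drive the system under test.
    repo.add_document(case_id, "application.pdf")
    # Assert: read the data back through the same API.
    assert repo.get_case(case_id)["documents"] == ["application.pdf"]


check_document_is_filed_on_case(InMemoryTestDataRepository())
print("ok")
```

Because each test creates the data it depends on, it does not rely on whatever state a previous test or a manual tester left behind.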

“Customizations” layer

If doing customizations, it’s important that all customizations are installed in similar ways and support silent install. Many of our customers have minor or larger customizations that are installed on top of the standard product.

Automated installation of customizations in test environments is configured in the same XML files used above for installing the standard products. This way the XML configuration file also documents the customer’s configuration, i.e. the version of the standard product and add-ons as well as their specific customization.

The XML snippet above shows a customization consisting of two packages.
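To illustrate how such a file can double as documentation of a customer's setup, the following Python sketch extracts a summary from a configuration. The schema, customer name, versions and package file names are hypothetical and chosen only for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical customer configuration; every name and version is made up.
CUSTOMER_XML = """
<Environment customer="ExampleCustomer">
  <Product name="StandardProduct" version="4.5" />
  <AddOn name="ExampleAddOn" version="1.2" />
  <Customization name="ExampleCustomization">
    <Package file="package-one.msi" />
    <Package file="package-two.msi" />
  </Customization>
</Environment>
"""


def summarize(xml_text):
    """Collect product, add-ons and customization packages from the config."""
    root = ET.fromstring(xml_text)
    product = root.find("Product")
    return {
        "customer": root.get("customer"),
        "product": (product.get("name"), product.get("version")),
        "add_ons": [(a.get("name"), a.get("version")) for a in root.findall("AddOn")],
        "packages": [p.get("file") for p in root.findall("Customization/Package")],
    }


summary = summarize(CUSTOMER_XML)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Since the same file drives the installation, this summary cannot drift from what is actually deployed in the test environment.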

“Customization Test Data” layer

If the customer has any special test data, this is defined in one or more XML files, which are populated into the system using the Test Data Repository described above. It’s important to handle a customization as a standard software product, i.e. don’t develop anything special for a customer, but focus on using the same structure and tools for all customizations, in order to be able to automate deployment and test for the customizations as well.

The above XML shows test data for a customization, which is applied after the customer solution has been installed in test.

“Tests” layer

With all this we now have a system, optionally with customization, ready for test. Deploying an environment with customization typically takes 30-40 minutes including reverting to the snapshot, without any user interaction. That is a huge step forward compared to manually setting up an environment.
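The layers described so far can be chained into one unattended run. The Python sketch below shows the idea under simple assumptions: the step names follow the layers in this post, the actions are empty stand-ins, and one of them fails on purpose to show how the run stops. It is not how our TFS build is implemented.

```python
def deploy(steps):
    """Run the steps in order; stop at the first failure and report progress."""
    completed = []
    for name, action in steps:
        try:
            action()
        except Exception as error:
            return completed, f"{name} failed: {error}"
        completed.append(name)
    return completed, None


def noop():
    """Stand-in for a step that succeeds."""


def failing():
    """Stand-in for a step that fails, to show how the run stops."""
    raise RuntimeError("installer returned exit code 1")


steps = [
    ("revert to baseline snapshot", noop),
    ("install prerequisites", noop),
    ("install system under test", noop),
    ("apply test data", noop),
    ("install customization", failing),
    ("apply customization test data", noop),
]

completed, error = deploy(steps)
print(completed)
print(error)
```

Stopping at the first failed layer matters because every later layer depends on the ones below it; continuing would only produce misleading test results.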

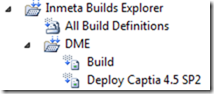

TFS Build Definition

As we’re running TFS, the actual deployment is configured in a build definition:

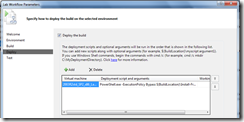

When starting a deployment, you specify which lab environment to use:

And which machines on the environment to deploy to (in this case only the webserver):

Test automation dependencies include environments, system under test and test data (as well as many others). Ensuring the foundation is in place is a key factor in developing successful test automation, and I hope this post has given some insight into how we have automated deployment and configuration of test environments at ScanJour.