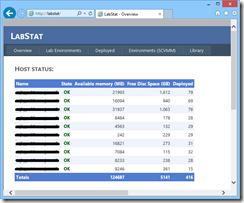

As described in http://rasmus.selsmark.dk/post/2012/09/26/Levels-of-Software-(Test)-Automation.aspx, we have put a lot of effort at ScanJour into automating our lab, currently running approx. 400 virtual machines using TFS 2010 Lab Manager. Running this many machines in SCVMM/Lab Manager is a challenge in itself, which I might come back to in a later post. In order to keep track of all these machines, my colleague Kim Carlsen started out developing an internal website (“labstat”) which displays information about:

- Deployed machines and their state

- Available RAM and disk space on each SCVMM host

- Number of machines deployed per TFS project

- Available disk space in libraries

All of this information is of course available from the SCVMM console, but to let people help themselves, we expose it to our users through the website. A small part of the site looks like the following:

All of this information is pulled from SCVMM using PowerShell scripts. This sample shows how to get machine details using the Get-VM cmdlet:

Add-PSSnapin Microsoft.SystemCenter.VirtualMachineManager

#VMs deployed and in library

$dt= Get-VM | Select @{N="VmName"; E = {$_.Name}}, @{N="LabName"; E = {([xml]($_.Description)).LabManagement.LabSystem.Innertext}}, owner, status, description, hostname, location, creationtime, @{N="Project"; E = {([xml]($_.Description)).LabManagement.Project}}, @{N="Environment"; E = {([xml]($_.Description)).LabManagement.LabEnvironment.Innertext}}, @{N="Snapshots"; E = {$_.VMCheckpoints.count}}, hosttype, @{N="TemplateName"; E = {([xml]($_.Description)).LabManagement.LabTemplate.innertext}}, Memory | Out-datatable

Invoke-Sqlcmd -query "Delete from VM" -Database $Global:VMMDatabase -ServerInstance $Global:ServerInstance

Write-DataTable -ServerInstance $Global:ServerInstance -Database $Global:VMMDatabase -TableName "VM" -Data $dt

With approx. 400 machines running, another question that often pops up is “Do we have a test environment with version X of product Y?”. The solution for this is the following page, which shows detailed information for each environment (apologies for the layout, we’re probably not going to win any design awards for this website…):

The information presented for each environment is:

- Environment name and owner/creator

- The “InUse” column, which simply queries TFS for the “Marked In Use” information that you can set on an environment. The advantage of using this property is that it can be set without having to shut down the environment, as opposed to e.g. changing the description field, which can only be done when the environment is not running

- Machines in the environment, both with the internal name (almost all of our environments are network isolated) and OS

- Finally the products installed in the environment

We store all of this data in a separate database, which is updated every 10 to 60 minutes, depending on the type of information. The SCVMM data (deployed machines, state etc.) is queried every 10 minutes, whereas collecting the information about installed products is more time-consuming and is therefore only done once per hour.
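The post does not say how these refreshes are scheduled. As a minimal sketch, assuming a single polling loop decides which refresh to run, the two intervals could be modelled like this. The `RefreshJob` class and its members are hypothetical and not part of the labstat code.

```csharp
using System;

// Hypothetical helper: tracks when a refresh last ran and whether it is due again.
public class RefreshJob
{
    public string Name { get; private set; }
    public TimeSpan Interval { get; private set; }
    public DateTime? LastRun { get; private set; }

    public RefreshJob(string name, TimeSpan interval)
    {
        Name = name;
        Interval = interval;
    }

    // A job is due if it has never run, or if at least one interval has passed.
    public bool IsDue(DateTime now)
    {
        return LastRun == null || now - LastRun.Value >= Interval;
    }

    // Record the time of the latest successful refresh.
    public void MarkRun(DateTime now)
    {
        LastRun = now;
    }
}
```

With this shape, the SCVMM refresh would be created with `TimeSpan.FromMinutes(10)` and the installed-products refresh with `TimeSpan.FromMinutes(60)`, and the loop would call `IsDue` for each job on every tick.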

In order to access the remote registry on the lab machines, the firewall on each machine must be configured first, which is done using this PowerShell function:

function Initialize-FirewallForWMI

{

Write-Host "Opening Windows Firewall for WMI"

Start-Process -FilePath "netsh.exe" -ArgumentList 'advfirewall firewall set rule group="windows management instrumentation (wmi)" new enable=yes' -NoNewWindow -Wait

}

Using TFS API for getting information about deployed lab environments

Getting details for environments and machines in the lab is simply a matter of accessing the TFS API. One interesting detail here is that we also get the IP address of each machine, to make it easier for people to connect to the machines with Remote Desktop without necessarily having to open the Microsoft Test and Lab Manager client.

// open database and TFS connection

using (SqlConnection cnn = new SqlConnection(databaseConnectionString))

using (TfsTeamProjectCollection tfs = new TfsTeamProjectCollection(new Uri(tfsUrl)))

{

tfs.EnsureAuthenticated();

cnn.Open();

DataAccess.ResetIsTouchedForLabEnvironmentsAndMachines(cnn);

LabService labService = tfs.GetService<LabService>();

ICommonStructureService structureService = (ICommonStructureService)tfs.GetService(typeof(ICommonStructureService));

ProjectInfo[] projects = structureService.ListAllProjects();

// iterate all TFS Projects

foreach (ProjectInfo project in projects)

{

LabEnvironmentQuerySpec leqs = new LabEnvironmentQuerySpec();

leqs.Project = project.Name;

var envs = labService.QueryLabEnvironments(leqs);

// Iterate all environments in current TFS project

foreach (LabEnvironment le in envs.Where(e => e.Disposition == LabEnvironmentDisposition.Active))

{

Trace.WriteLine(String.Format("Project: {0}; Environment: {1}", project.Name, le.Name));

// need to reload in order to get ExtendedInfo data on machines in environment

LabEnvironment env = labService.GetLabEnvironment(le.Uri);

DateTime? inUseSince = null;

if (env.InUseMarker != null)

{

inUseSince = env.InUseMarker.Timestamp;

}

LabEnvironmentDTO envData = new LabEnvironmentDTO

{

Id = env.LabGuid,

Name = env.Name,

Description = env.Description,

ProjectName = env.ProjectName,

CreationTime = env.CreationTime,

Owner = env.CreatedBy,

State = env.StatusInfo.State.ToString(),

InUseComment = (env.InUseMarker == null ? "" : env.InUseMarker.Comment),

InUseByUser = (env.InUseMarker == null ? "" : env.InUseMarker.User),

InUseSince = inUseSince

};

DataAccess.Save(cnn, envData);

// Iterate machines in environment

foreach (LabSystem ls in env.LabSystems)

{

string computerName = String.Empty;

string internalComputerName = String.Empty;

string os = String.Empty;

StringBuilder ip = new StringBuilder();

if (ls.ExtendedInfo != null)

{

computerName = ls.ExtendedInfo.RemoteInfo.ComputerName;

internalComputerName = ls.ExtendedInfo.RemoteInfo.InternalComputerName;

os = ls.ExtendedInfo.GuestOperatingSystem;

if (!String.IsNullOrWhiteSpace(computerName))

{

try

{

IPAddress[] ips = Dns.GetHostAddresses(computerName);

foreach (IPAddress ipaddr in ips)

{

if (ip.Length != 0)

ip.Append(",");

ip.Append(ipaddr);

}

}

catch (Exception ex)

{

ip.Append(ex.Message);

}

}

}

LabMachineDTO machineData = new LabMachineDTO

{

Id = ls.LabGuid,

Name = ls.Name,

LabEnvironmentId = le.LabGuid,

ComputerName = computerName,

InternalComputerName = internalComputerName,

IpAddress = ip.ToString(),

OS = os,

State = ls.StatusInfo.State.ToString()

};

DataAccess.Save(cnn, machineData);

string machineDisplayName = String.Format(@"{0}\{1}\{2}", project.Name, le.Name, internalComputerName);

ExtractPropertiesForLabMachine(cnn, ls, machineDisplayName);

} // foreach machine

} // foreach environment

} // foreach project

// TODO: DataAccess.DeleteUntouchedEnvironmentsAndMachines(cnn);

} // using SqlConnection + TFS
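The loop above starts by calling `DataAccess.ResetIsTouchedForLabEnvironmentsAndMachines` and ends with a TODO for `DataAccess.DeleteUntouchedEnvironmentsAndMachines`; neither implementation is shown in this post. The following is a minimal in-memory sketch of the mark-and-sweep idea those names suggest, assuming every saved row carries an "is touched" flag. The `LabRecord` and `LabCache` types are hypothetical stand-ins for the database tables and the `DataAccess` class.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a row in the environment or machine table.
public class LabRecord
{
    public Guid Id { get; set; }
    public string Name { get; set; }
    public bool IsTouched { get; set; }
}

// Hypothetical in-memory replacement for the DataAccess class.
public class LabCache
{
    private readonly Dictionary<Guid, LabRecord> records = new Dictionary<Guid, LabRecord>();

    public int Count { get { return records.Count; } }

    public bool Contains(Guid id) { return records.ContainsKey(id); }

    // Step 1: clear the flag on every known record before a refresh.
    public void ResetIsTouched()
    {
        foreach (LabRecord record in records.Values)
            record.IsTouched = false;
    }

    // Step 2: insert or update a record seen during the refresh and mark it.
    public void Save(Guid id, string name)
    {
        LabRecord record;
        if (!records.TryGetValue(id, out record))
        {
            record = new LabRecord { Id = id };
            records.Add(id, record);
        }
        record.Name = name;
        record.IsTouched = true;
    }

    // Step 3: remove records not seen during the refresh, i.e. no longer in the lab.
    public int DeleteUntouched()
    {
        List<Guid> stale = records.Values.Where(r => !r.IsTouched).Select(r => r.Id).ToList();
        foreach (Guid id in stale)
            records.Remove(id);
        return stale.Count;
    }
}
```

The point of the pattern is that environments and machines deleted from the lab never show up in the TFS query, so they keep their cleared flag and can be removed in one final pass.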

Querying lab machines for information about installed products

My first attempt at getting information about installed products on a remote machine used “Get-WmiObject -Class Win32_Product” in PowerShell. This works, but as described on http://sdmsoftware.com/wmi/why-win32_product-is-bad-news/ it has the unwanted side effect that each MSI product queried on the remote machine is reconfigured/repaired. Because of this, and because repairing each product on the machine took a long time, I decided to use remote registry access instead and read the values under “HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\Uninstall”, where Windows stores information about installed applications. Both the 32-bit and 64-bit views of the registry are queried.

The code for accessing the remote registry is shown here:

/// <summary>

/// Populates the products for machine using remote registry access.

/// </summary>

/// <param name="machineName">Name of the machine.</param>

/// <param name="products">Reference to products collection, that will be populated.</param>

/// <param name="registryMode">The registry mode (32- or 64-bit).</param>

/// <returns>False, if e.g. access denied, which means no reason to try subsequent reads</returns>

private static bool PopulateProductsForMachine(string machineName, List<Product> products, RegistryMode registryMode)

{

string registryPath = @"SOFTWARE\Microsoft\Windows\CurrentVersion\Uninstall";

if (registryMode == RegistryMode.SysWow64)

registryPath = @"SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Uninstall";

try

{

using (RegistryKey remoteRegistry = RegistryKey.OpenRemoteBaseKey(RegistryHive.LocalMachine, machineName))

{

if (remoteRegistry == null)

return false; // could not open HKLM on remote machine. No need to continue

using (RegistryKey key = remoteRegistry.OpenSubKey(registryPath))

{

if (key == null)

return true; // no error, we just couldn't locate this registry entry

string[] subKeyNames = key.GetSubKeyNames();

foreach (string subKeyName in subKeyNames)

{

// dispose each sub key after reading it, to avoid leaking remote registry handles

using (RegistryKey subKey = key.OpenSubKey(subKeyName))

{

if (subKey == null)

continue;

string name = GetRegistryKeyValue(subKey, "DisplayName");

string vendor = GetRegistryKeyValue(subKey, "Publisher");

string version = GetRegistryKeyValue(subKey, "DisplayVersion");

if (!String.IsNullOrWhiteSpace(name))

{

products.Add(new Product { Name = name, Vendor = vendor, Version = version });

}

} // using subKey

}

} // using key

} // using remoteRegistry

return true;

}

catch (Exception ex)

{

Trace.WriteLine(String.Format("An exception occurred while opening remote registry on machine '{0}': {1}", machineName, ex.Message));

return false;

}

}

private static string GetRegistryKeyValue(RegistryKey key, string paramName)

{

if (key == null)

throw new ArgumentNullException("key");

object value = key.GetValue(paramName);

if (value == null)

return String.Empty;

return value.ToString();

}

Before you can access the remote registry using RegistryKey.OpenRemoteBaseKey(), you need to authenticate against the machine, which is done by connecting to the “C$” administrative share on the machine.
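`RegistryKey.OpenRemoteBaseKey()` has no parameter for credentials, which is why a connection to the machine has to be established first. The `NetworkShare` class used for this is not part of the .NET Framework, and its source is not included in this post. As a rough sketch only, assuming such a wrapper is built on the Win32 functions `WNetAddConnection2` and `WNetCancelConnection2` in mpr.dll, it could look like the following. The class name `NetworkShareSketch` and its members are hypothetical.

```csharp
using System;
using System.ComponentModel;
using System.Runtime.InteropServices;

// Hypothetical wrapper; not the NetworkShare class used in this post.
public sealed class NetworkShareSketch
{
    private const int RESOURCETYPE_DISK = 1;

    [StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
    private struct NETRESOURCE
    {
        public int dwScope;
        public int dwType;
        public int dwDisplayType;
        public int dwUsage;
        public string lpLocalName;
        public string lpRemoteName;
        public string lpComment;
        public string lpProvider;
    }

    [DllImport("mpr.dll", CharSet = CharSet.Unicode)]
    private static extern int WNetAddConnection2(ref NETRESOURCE netResource, string password, string userName, int flags);

    [DllImport("mpr.dll", CharSet = CharSet.Unicode)]
    private static extern int WNetCancelConnection2(string name, int flags, bool force);

    private readonly string userName;
    private readonly string password;

    // UNC path of the share, e.g. \\machine\C$
    public string RemoteName { get; private set; }

    public NetworkShareSketch(string host, string share, string userName, string password)
    {
        RemoteName = String.Format(@"\\{0}\{1}", host, share);
        this.userName = userName;
        this.password = password;
    }

    // Opens an authenticated connection to the share without mapping a drive letter.
    public void Connect()
    {
        NETRESOURCE resource = new NETRESOURCE { dwType = RESOURCETYPE_DISK, lpRemoteName = RemoteName };
        int result = WNetAddConnection2(ref resource, password, userName, 0);
        if (result != 0)
            throw new Win32Exception(result);
    }

    // Closes the connection; errors are ignored because this runs during cleanup.
    public void Disconnect()
    {
        WNetCancelConnection2(RemoteName, 0, true);
    }
}
```

In the lab status code, the share is connected before the registry is read and disconnected again in a finally block: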

// connect to server

NetworkShare share = new NetworkShare(labMachineDnsName, "C$", @"(domain)\Administrator", "(password)");

try

{

share.Connect();

// get list of all products installed on machine

List<Product> products = new List<Product>();

if (!PopulateProductsForMachine(labMachineDnsName, products, RegistryMode.Default))

return; // failed to connect to remote registry -> don't try any further on this machine

PopulateProductsForMachine(labMachineDnsName, products, RegistryMode.SysWow64);

// keep only products by ScanJour and selected Microsoft applications

var relevantProducts =

from p in products

where (p.Vendor.Equals("ScanJour", StringComparison.OrdinalIgnoreCase))

|| (p.Name.StartsWith("ScanJour", StringComparison.OrdinalIgnoreCase))

|| (p.Name.StartsWith("Microsoft Office Professional"))

|| (p.Name.StartsWith("Microsoft Office Enterprise"))

|| (p.Name == "Microsoft Visual Studio 2010 Premium - ENU")

|| (p.Name == "Microsoft Visual Studio 2010 Professional - ENU")

|| (p.Name == "Microsoft Visual Studio Premium 2012")

select p;

foreach (Product product in relevantProducts)

{

LabMachinePropertyDTO data = new LabMachinePropertyDTO

{

LabMachineId = machine.LabGuid,

Id = "Product",

Value = String.Format("{0} ({1})", product.Name, product.Version)

};

Trace.WriteLine(String.Format("Adding product '{0}'", data.Value));

DataAccess.Save(cnn, data);

}

// Find Oracle version on machine

string oracleVersion = GetOracleVersionForMachine(labMachineDnsName);

if (!String.IsNullOrWhiteSpace(oracleVersion))

{

LabMachinePropertyDTO data = new LabMachinePropertyDTO

{

LabMachineId = machine.LabGuid,

Id = "Product",

Value = oracleVersion

};

Trace.WriteLine(String.Format("Adding product '{0}'", data.Value));

DataAccess.Save(cnn, data);

}

}

catch (Exception ex)

{

Trace.WriteLine("An exception occurred while getting lab machine properties:");

Trace.WriteLine(ex.ToString());

}

finally

{

// Disconnect the share

share.Disconnect();

}

As can be seen from the code, we’re querying for our own products (vendor or name “ScanJour”) and for the versions of Visual Studio and Microsoft Office installed on the machines. We also display which version of Oracle is installed in the environment, again by simply querying for a specific registry key.
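If the list of interesting products grows, the query can become hard to read. One possible refactoring, shown here only as a sketch, is to move the rules into a standalone predicate. The `ProductFilter` class is hypothetical, and the `Product` class is a minimal stand-in with the three properties used in this post. Note one deliberate difference: the sketch compares all names case-insensitively with ordinal comparison, whereas the original query is case-sensitive for the Microsoft product names.

```csharp
using System;
using System.Linq;

// Minimal stand-in for the Product class used in the post.
public class Product
{
    public string Name { get; set; }
    public string Vendor { get; set; }
    public string Version { get; set; }
}

// Hypothetical helper that holds the filter rules in one place.
public static class ProductFilter
{
    // Name prefixes taken from the query in the post.
    private static readonly string[] NamePrefixes =
    {
        "ScanJour",
        "Microsoft Office Professional",
        "Microsoft Office Enterprise"
    };

    // Exact product names taken from the query in the post.
    private static readonly string[] ExactNames =
    {
        "Microsoft Visual Studio 2010 Premium - ENU",
        "Microsoft Visual Studio 2010 Professional - ENU",
        "Microsoft Visual Studio Premium 2012"
    };

    public static bool IsRelevant(Product p)
    {
        // Treat missing values as empty so the comparisons never throw.
        string name = p.Name ?? String.Empty;
        string vendor = p.Vendor ?? String.Empty;

        if (vendor.Equals("ScanJour", StringComparison.OrdinalIgnoreCase))
            return true;

        if (NamePrefixes.Any(prefix => name.StartsWith(prefix, StringComparison.OrdinalIgnoreCase)))
            return true;

        return ExactNames.Contains(name, StringComparer.OrdinalIgnoreCase);
    }
}
```

The query then reduces to `products.Where(ProductFilter.IsRelevant)`, and adding a product to the list becomes a one-line change in one of the two arrays.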

Conclusion

In my previous blog post, I described how we have automated the deployment of test environments in the lab. In this post we have covered another important aspect of maintaining a large test lab, namely getting an overview of the state of lab environments and machines. Building an automation framework around your lab infrastructure of course requires some effort, but once in place, it has given us the following benefits:

- Minimized manual time used on setting up and maintaining lab environments

- Providing an overview of lab usage for all users

- Increased predictability when setting up multiple environments, since all configuration of environments is automated

- Easier rollout of changes to base templates. For example, we have a single Domain Controller template, which is regularly updated with the latest Windows Updates. Because environments are created automatically, we get “fresh” environments more often; earlier, setting up a new environment was a manual process and was therefore not done as often.

The main conclusion here is that you should invest some time in automating your lab. It certainly costs some time, but now that we have it, I don’t understand how we were able to get any work done in the lab back when we were setting up environments manually.