First session today was DEV345 - The Accidental Team Foundation Server Admin, which didn’t quite stick to the subject: it was more about the differences between TFS 2010 and TFS 2012 and how to upgrade than general information for people who have taken over responsibility for a TFS server from someone else.

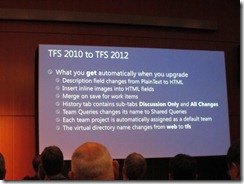

One of the most important notes from this session was to run the Best Practices Analyzer tool for Team Foundation Server, not only once or twice but on a monthly basis. There were also some interesting slides on what is automatically enabled when upgrading from TFS 2010 to TFS 2012 (left) and what has to be manually enabled (right):

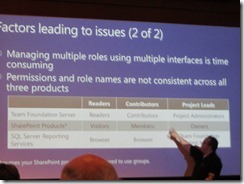

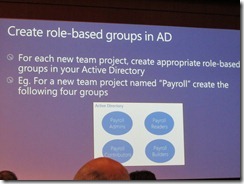

And some slides on permissions in TFS, which are actually split across TFS, SharePoint and SQL Server Reporting Services (left slide). The middle slide (apologies for the bad quality) shows the different permission names in the different parts, and the last one shows a “pattern” for configuring one AD group per role per team project:

This separation of permissions has not changed in TFS 2012, so it’s still required to set permissions in TFS, SharePoint and SQL Server Reporting Services. The tool at http://tfsadmin.codeplex.com/ was presented, which might help with setting permissions.
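The one-group-per-role-per-team-project pattern can be sketched roughly as below. This is only an illustration: the `TFS_<project>_<role>` naming scheme, the three roles and the mapping to permissions in each system are my own assumptions, not what was on the slide, and the script only prints a plan; it does not talk to TFS, SharePoint or Reporting Services.

```python
# Hypothetical sketch: one AD group per role per team project.
# The naming scheme and the role-to-permission mapping are assumptions
# made for illustration; they are not taken from the session slides.

ROLE_PERMISSIONS = {
    "Readers": {
        "tfs": "Readers",
        "sharepoint": "Read",
        "reporting": "Browser",
    },
    "Contributors": {
        "tfs": "Contributors",
        "sharepoint": "Contribute",
        "reporting": "Browser",
    },
    "Admins": {
        "tfs": "Project Administrators",
        "sharepoint": "Full Control",
        "reporting": "Content Manager",
    },
}


def group_name(project, role):
    """Build the (hypothetical) AD group name for a project/role pair."""
    return f"TFS_{project}_{role}"


def permission_plan(project):
    """List (AD group, system, permission) triples for one team project."""
    plan = []
    for role, targets in ROLE_PERMISSIONS.items():
        group = group_name(project, role)
        for system, permission in targets.items():
            plan.append((group, system, permission))
    return plan


if __name__ == "__main__":
    # "WebShop" is a made-up team project name.
    for group, system, permission in permission_plan("WebShop"):
        print(f"{group}: {system} -> {permission}")
```

The point of the pattern is that each AD group is granted its permission once in each of the three systems, and after that people are only added to or removed from the AD group.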

A new feature of TFS 2012 is the ability to create “teams” within a project, each of which then gets its own product backlog, burndown charts etc. I hope this feature can reduce the number of necessary projects in TFS, as projects introduce a lot of limitations on branching and lab environments (which cannot be deployed across TFS projects).

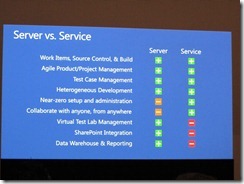

Next I went to DEV340 - Taking Your Application Lifecycle Management to the Cloud With the Team Foundation Service, which showed:

- Setting up a hosted TFS on http://tfspreview.com

- Creating a new project

- Creating a simple MVC 4 solution, checking in code and configuring build definition(s) for compiling code on the TFS server

- Creating a website on Azure

- Configuring a deployment build definition to deploy the website to Azure

All this was done within approx. 30 minutes, and is basically all it takes for a company to set up the infrastructure for source control, builds, work item tracking and deployment. If I had to emphasize one thing from this TechEd conference, this is probably it.

I also learned from this session that the TFS team is working in 3-week sprints and releasing to tfspreview.com after every sprint, which has given them a lot of really valuable feedback from customers on features to improve. Sounds like a really interesting success story on releasing often to production. For Microsoft it’s also a big change compared to earlier versions of TFS, where they could only ship new features with service packs. Another interesting detail is that Microsoft has 4 people operating the tfspreview.com site, running thousands of accounts. Of course there is still work to do for the "on-premise" TFS admin, but it certainly sounds like there is a potential cost saving in running TFS this way.

From what I have seen of TFS 2012 here at TechEd, I must say that the web UI is really impressive, and in general it seems Microsoft has improved TFS in a lot of areas. I really hope to be able to upgrade as soon as possible once TFS 2012 has been released.

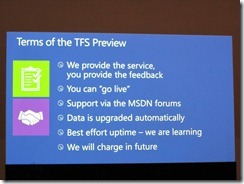

Here are slides on terms for using TFS Preview and currently supported features:

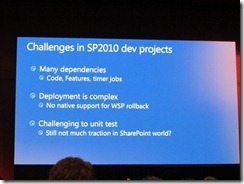

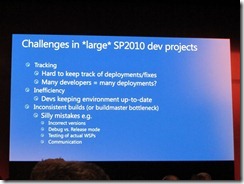

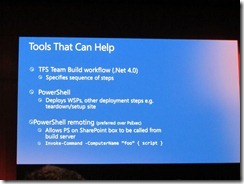

The worst session I have attended so far was OSP432 - Application Lifecycle Management: Automated Builds and Testing for SharePoint projects (if the speaker reads this, I’m sure he agrees). The first slides started out by showing which problems to solve. So far, so good, but note the right slide here mentioning the use of PowerShell remoting for deploying the SharePoint solution to a test environment.

This is built into TFS as the build-deploy-test feature, but nevertheless he showed how to customize a build process template in order to execute a PowerShell script.

This is surely not the way to do it, and if you’re a SharePoint developer reading this, make sure to use the standard deployment build process template shipped with both TFS 2010 and TFS 2012. He also showed how to create a CodedUI test using the standard recorder tool shipped with Visual Studio. Nothing new for me there, except the fact that CodedUI tests can be used for automating SharePoint UI tests. And then he made the mistake of editing the UIMap designer.cs file. The designer.cs file is autogenerated by VS (as it says at the top of the file), and should of course never be modified by hand. See the file name in the upper left corner of the image:

Breaking the post today into two parts, as it has become quite long already. Go to second part